1 Eliminating Redundant Loads and Stores

2 Eliminating Unreachable Code

3 Flow-of-Control Optimizations

4 Algebraic Simplification and Reduction in Strength

5 Use of Machine Idioms

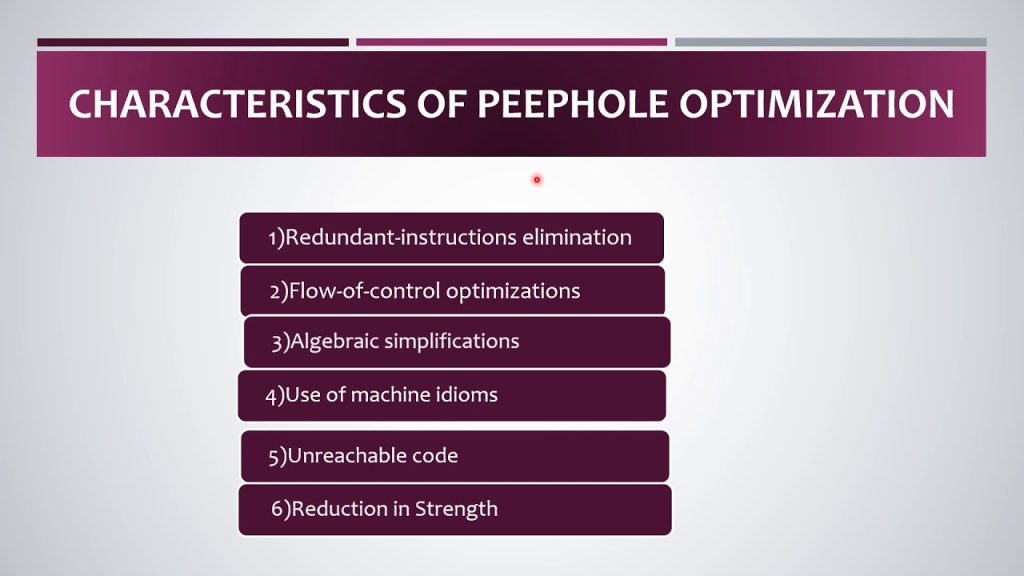

While most production compilers produce good code through careful instruc-tion selection and register allocation, a few use an alternative strategy: they generate naive code and then improve the quality of the target code by applying “optimizing” transformations to the target program. The term “optimizing” is somewhat misleading because there is no guarantee that the resulting code is optimal under any mathematical measure. Nevertheless, many simple transfor-mations can significantly improve the running time or space requirement of the target program.

A simple but effective technique for locally improving the target code is peephole optimization, which is done by examining a sliding window of target instructions (called the peephole) and replacing instruction sequences within the peephole by a shorter or faster sequence, whenever possible. Peephole optimization can also be applied directly after intermediate code generation to improve the intermediate representation.

The peephole is a small, sliding window on a program. The code in the peephole need not be contiguous, although some implementations do require this. It is characteristic of peephole optimization that each improvement may spawn opportunities for additional improvements. In general, repeated passes over the target code are necessary to get the maximum benefit. In this sec-tion, we shall give the following examples of program transformations that are characteristic of peephole optimizations:

Redundant-instruction elimination

Flow-of-control optimizations

Algebraic simplifications

Use of machine idioms

1. Eliminating Redundant Loads and Stores

If we see the instruction sequence

LD a, RO

ST RO, a

in a target program, we can delete the store instruction because whenever it is executed, the first instruction will ensure that the value of a has already been loaded into register RO. Note that if the store instruction had a label, we could not be sure that the first instruction is always executed before the second, so we could not remove the store instruction. Put another way, the two instructions have to be in the same basic block for this transformation to be safe.

Redundant loads and stores of this nature would not be generated by the simple code generation algorithm of the previous section. However, a naive code generation algorithm like the one in Section 8.1.3 would generate redundant sequences such as these.

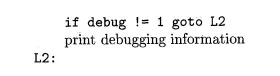

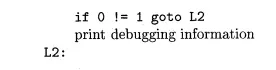

2. Eliminating Unreachable Code

Another opportunity for peephole optimization is the removal of unreachable instructions. An unlabeled instruction immediately following an unconditional jump may be removed. This operation can be repeated to eliminate a sequence of instructions. For example, for debugging purposes, a large program may have within it certain code fragments that are executed only if a variable debug is equal to 1. In the intermediate representation, this code may look like

if debug == 1 goto LI

goto L2

L I : print debugging information

L2:

One obvious peephole optimization is to eliminate jumps over jumps. Thus, no matter what the value of debug, the code sequence above can be replaced by

If debug is set to 0 at the beginning of the program, constant propagation would transform this sequence into

Now the argument of the first statement always evaluates to true, so the statement can be replaced by goto L2. Then all statements that print debug-ging information are unreachable and can be eliminated one at a time.

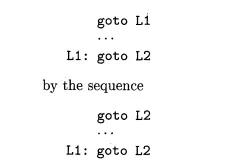

3. Flow-of-Control Optimizations

Simple intermediate code-generation algorithms frequently produce jumps to jumps, jumps to conditional jumps, or conditional jumps to jumps. These unnecessary jumps can be eliminated in either the intermediate code or the target code by the following types of peephole optimizations. We can replace the sequence

goto Li

LI: goto L2

by the sequence

goto L2

LI: goto L2

If there are now no jumps to LI, then it may be possible to eliminate the statement LI: goto L2 provided it is preceded by an unconditional jump .

Similarly, the sequence

if a < b goto LI

LI: goto L2

Can be replaced by the sequence

if a < b goto L2

LI: goto L2

Finally, suppose there is only one jump to LI and LI is preceded by an unconditional goto. Then the sequence

goto LI

LI: if a < b goto L2

L3:

may be replaced by the sequence

if a < b goto L2

goto L3

L3:

While the number of instructions in the two sequences is the same, we sometimes skip the unconditional jump in the second sequence, but never in the first. Thus, the second sequence is superior to the first in execution time,

4. Algebraic Simplification and Reduction in Strength

In Section 8.5 we discussed algebraic identities that could be used to simplify DAG’s. These algebraic identities can also be used by a peephole optimizer to eliminate three-address statements such as

x = x + 0

or

x = x * 1

in the peephole.

Similarly, reduction-in-strength transformations can be applied in the peep-hole to replace expensive operations by equivalent cheaper ones on the target machine. Certain machine instructions are considerably cheaper than others and can often be used as special cases of more expensive operators. For ex-ample, x2 is invariably cheaper to implement as x * x than as a call to an exponentiation routine. Fixed-point multiplication or division by a power of two is cheaper to implement as a shift. Floating-point division by a constant can be approximated as multiplication by a constant, which may be cheaper.

5. Use of Machine Idioms

The target machine may have hardware instructions to implement certain spe-cific operations efficiently. Detecting situations that permit the use of these instructions can reduce execution time significantly. For example, some ma-chines have auto-increment and auto-decrement addressing modes. These add or subtract one from an operand before or after using its value. The use of the modes greatly improves the quality of code when pushing or popping a stack, as in parameter passing. These modes can also be used in code for statements like x = x + 1.

Comments are closed