A large part of what makes computer science hard is that it can be difficult to know where to start when it comes to solving a tough, seemingly insurmountable problem.

One of the reasons that some things can seem so tricky is that they’re multistep problems: they involve us first understanding the problem, then considering the simplest solution, then iterating upon that solution to make it better, more efficient, and more elegant. I often think of the phrase that has been attributed to Kent Beck, who said, “Make it work, make it right, make it fast.”

Some of the most complex problems in computer science are complex for this very reason: they involve these three distinct parts, and it can feel super overwhelming if we don’t consider these three steps as unique points in our problem-solving strategy. The complex problems are the ones where we are forced to step back and break up our problem-solving process into segments, rather than trying to magically find the perfect solution in one go. To be honest, finding the perfect solution in one go rarely ever happens.

We’ve covered some tricky topics throughout the course of this series, but one of the more complicated topics presented itself more recently when we encountered the traveling salesman problem (TSP). Since we have already taken the first step of trying to find a solution to TSP that just works, we can now concern ourselves with the next steps: making it right (or more elegant), and hopefully a little bit faster.

No Fun Factorials

When we first stumbled upon the traveling salesman problem, we were dealing with a salesman who had a fairly easy task: to visit four cities in some order, as long as he visited each city once and ended up at the same city that he started in.

Now, the reason that this was an “easy” task, so to speak, is simply that visiting four cities isn’t really a lot to do. In algorithmic terms, we were able to solve this problem and find the shortest path for our salesman using a brute-force technique, combined with recursion. We were able to determine that the brute-force approach was, by definition, a factorial algorithm. In our example, we determined that a salesman who needs to visit four cities would mean making 3! or “three factorial” function calls, which equals 6.
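To make the brute-force idea concrete, here is a minimal Python sketch rather than the exact implementation from earlier in the series. The function name `shortest_tour` and the distance matrix `dist` are made up for illustration. It tries every ordering of the remaining cities recursively and counts how many complete round trips it evaluates, which for four cities comes out to 3! = 6.

```python
def shortest_tour(dist, start=0):
    """Brute-force TSP: recursively try every ordering of the remaining cities.

    `dist` is a square matrix where dist[a][b] is the distance from city a
    to city b. Returns (length of the shortest round trip, complete paths tried).
    """
    paths_tried = 0

    def visit(current, remaining, cost_so_far):
        nonlocal paths_tried
        if not remaining:
            # Every city has been visited: close the loop back to the start.
            paths_tried += 1
            return cost_so_far + dist[current][start]
        # Otherwise, branch once for each city that has not been visited yet.
        return min(
            visit(nxt, remaining - {nxt}, cost_so_far + dist[current][nxt])
            for nxt in remaining
        )

    others = frozenset(range(len(dist))) - {start}
    return visit(start, others, 0), paths_tried


# Hypothetical distances between four cities (illustrative values only).
dist = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]

print(shortest_tour(dist))  # (80, 6): shortest round trip is 80, found among 6 paths
```

Fixing the starting city is what gives us (n - 1)! orderings instead of n!: with four cities, only the other three can be rearranged.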

We also started realizing that the factorial runtime of the brute-force technique for solving TSP was going to be unscalable over time. In fact, we realized that it was going to be unscalable almost immediately! For example, what would happen when our traveling salesman needed to visit not just four cities, but five cities? When we were dealing with four cities, we made six recursive calls. So, adding one extra city shouldn’t be too difficult, right? After all, it’s just one city.

Well, not exactly. Here’s how our algorithm scales from just four cities, to five:

How a factorial algorithm scales from an input of 4 elements to 5 elements.

When our salesman only had to visit four cities, we made six recursive calls. But now, we have literally quadrupled our tree of “potential paths”, which seems really, really, really bad. Solving TSP for five cities means that we need to make 4! or four factorial recursive calls using the brute-force technique. As it turns out, 4! equals 24, which means we now have to make 24 recursive calls in order to accommodate just one additional city in our traveling salesman’s map.
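The jump from 6 to 24 is just the start. As a rough sketch (the helper name `brute_force_paths` is made up for illustration), we can compute how many complete paths the brute-force approach has to consider for a given number of cities, assuming the starting city is fixed:

```python
from math import factorial


def brute_force_paths(cities):
    """Number of complete round trips to check when the starting city is fixed."""
    return factorial(cities - 1)


# Print the path count as the salesman's map grows one city at a time.
for cities in range(4, 11):
    print(f"{cities} cities -> {brute_force_paths(cities)} paths")
```

Each additional city multiplies the previous count by the number of non-starting cities, so by the time we reach ten cities we are already looking at 9! = 362,880 paths.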

If we compare the illustrated version of the “tree” of recursive function calls from our previous example of TSP to the one that is drawn above, we start to get a pretty good idea of just how unsustainable a factorial algorithm really is.

O(n!) runtime is unsustainable.

We have seen quite a few different forms of Big O Notation throughout this series, including the good and the bad. So, where do factorial algorithms fit into this narrative?

If constant, logarithmic, and linear time are good, and quadratic and exponential time are bad, there is only one thing left to explore: the ugly. Factorial algorithms are exactly that: the ugly.

For an algorithm that runs in factorial, or O(n!), time, the number of operations it performs grows in proportion to n!, where n is the size of the input data set.

Okay, but what does this actually mean? Well, let’s look at how a factorial algorithm compares to all the other forms of Big O Notation that we’re already familiar with.

Factorial time is super slow and inefficient as input size grows

We’ll notice almost immediately that algorithms that grow in factorial time are super slow and inefficient as input size grows. For example, we’ll see that even a slight increase in the number of elements to be operated upon by a factorial algorithm causes it to shoot up in the number of operations required to run. If we compare this to linear, linearithmic, or even quadratic time algorithms (and quadratic algorithms are still pretty bad in their own right), we’ll see that factorial algorithms are obscenely terrible in comparison!
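To put some numbers behind that comparison, here is a small illustrative sketch. The helper name `growth_row` is an assumption made for this example. It computes the approximate number of operations for several common growth rates at a given input size:

```python
from math import factorial, log2


def growth_row(n):
    """Approximate operation counts for common Big O classes at input size n."""
    return {
        "n": n,
        "log n": round(log2(n), 1),
        "n log n": round(n * log2(n)),
        "n^2": n ** 2,
        "2^n": 2 ** n,
        "n!": factorial(n),
    }


# Compare the growth rates for a few small input sizes.
for n in (5, 10, 15):
    print(growth_row(n))
```

At an input size of just 10, the quadratic column sits at 100 and the exponential column at 1,024, while the factorial column has already reached 3,628,800.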

All of this is to say: our first approach to solving TSP using brute-force recursion is probably not the best solution. Yes, it works, but it’s probably not as “right” as it could be; it could stand to be improved, and surely could be made more elegant. And, of course, it is not fast — at all!

Using brute-force takes a top-down approach to solving TSP.

So, how can we improve upon this first attempt that we made?

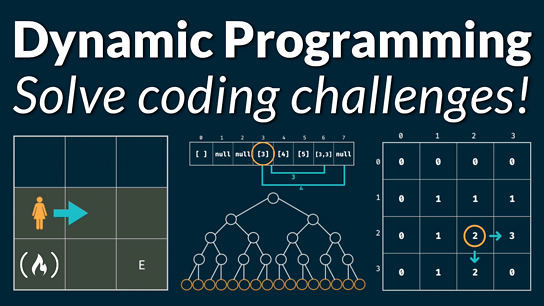

Well, if we think back to our foray into dynamic programming (DP), we’ll remember that there is more than one approach when it comes to solving a DP problem. In our initial stab at this problem, we attempted to solve TSP using a kind of top-down approach: we started with a large, complex problem, and broke it down into smaller parts. Then, once we had broken the problem down to its smallest possible parts and reached our base case, we used recursion to build up all the possible paths that our traveling salesman could take, which allowed us to choose the best (the shortest) permutation of all the paths that we had found.

In the process, we figured out one way to solve the traveling salesman problem. But what if we approached it in a different manner? What would happen if we took our top-down approach and turned it upside down?

There’s only one way to find out — we have to try it out!